In part one of this two-part series, we covered the common approaches to application modernization and key aspects of modernizing the database.

In this second part, we look at what to review in the database during modernization from the standpoint of data safety and security. Risks caused by incorrect handling of the database must be removed from the software, starting with the most dangerous ones. So let's see some of the main rules to keep under control.

Avoid missing checks for SQL injection

SQL injection is a hacking technique for inserting and executing unexpected SQL code in database-backed web applications. It occurs when data is sent to an interpreter that does not handle it correctly, allowing the attacker to access confidential information or execute commands. In the Common Weakness Enumeration (CWE) it is the well-known CWE-89: Improper Neutralization of Special Elements used in an SQL Command ('SQL Injection'), which is included in the OWASP Top 10.

A SQL injection attack is valuable to attackers because it can give them extended access to the database (or software back end). They are typically after sensitive information, such as the list of users of a website, or financial or corporate data. In the worst-case scenario, a SQL injection attack can lead to data modification or deletion.

The attack is possible when user input is not properly filtered, which lets an attacker alter the original structure of the SQL query by using special characters (for example, single and double quotes) or by concatenating multiple constructs (for example, with the SQL UNION keyword).

SQL injection can be prevented through correct input validation (data type, maximum length, format, content): accept only a predefined list of allowed characters and reject a denylist of potentially dangerous ones. Furthermore, the use of parameterized queries and stored procedures is recommended.
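As a minimal sketch of these recommendations, the following Python example uses the standard `sqlite3` module as a stand-in for the application's real database driver. The `users` table and the function names are hypothetical and exist only for illustration. It contrasts a query built by string concatenation with a parameterized one, and adds a simple allowlist check on the input.

```python
import re
import sqlite3

def is_valid_username(name):
    # Allowlist validation: letters, digits, and underscore only, 1 to 32 characters
    return re.fullmatch(r"[A-Za-z0-9_]{1,32}", name) is not None

def find_user_unsafe(conn, name):
    # Vulnerable: user input becomes part of the SQL text itself
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Parameterized: the value is bound separately and never parsed as SQL
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()

# Hypothetical sample data in an in-memory database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"                      # classic injection attempt
unsafe_rows = find_user_unsafe(conn, payload)  # condition is always true: every row leaks
safe_rows = find_user_safe(conn, payload)      # payload is treated as a plain string
```

With the concatenated query the payload rewrites the WHERE clause and every user is returned, while the parameterized query simply finds no user with that literal name. The allowlist check would also reject the payload before it ever reaches the database.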

Avoid procedures with Insert, Update, Delete, Create Table or Select operations without error handling

Failing to handle errors, or handling them incorrectly, during any database operation can lead to data corruption and uncontrolled application behavior, with repercussions on stability and security.

For example, in SQL Server and Sybase, error handling relies on the @@ERROR variable or the TRY…CATCH block. In Oracle PL/SQL functions and procedures, errors raised by a SQL statement are handled in the EXCEPTION section using WHEN clauses.

In addition to being present, error handling must also follow these rules:

- Always use the WHEN OTHERS clause in SQL error handling

- Never use WHEN OTHERS THEN NULL in SQL error handling

- Avoid “EMPTY CATCH blocks”

Leaving out the WHEN OTHERS clause, or omitting the instructions to execute when an error occurs, prevents calling procedures from intercepting the error. The result is unexpected application behavior and much more onerous troubleshooting when anomalies occur.

Additionally, default database error messages often include internal details that can help attackers identify a vulnerability or plan an attack. Therefore, you must ensure that all procedural code uses error handling to prevent default error messages from reaching the user.
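The same principles apply in application code that calls the database. The sketch below is an illustration in Python with the standard `sqlite3` module, not T-SQL or PL/SQL. The `orders` table, the `insert_order` function, and the `DataAccessError` class are hypothetical names. It shows a handler that is not empty: the transaction is rolled back, the detailed error is logged internally, and the caller receives a generic error without database internals.

```python
import logging
import sqlite3

logger = logging.getLogger("orders")

class DataAccessError(Exception):
    """Generic error passed to callers; carries no database internals."""

def insert_order(conn, order_id, amount):
    try:
        # The connection context manager commits on success
        # and rolls back if the block raises an exception.
        with conn:
            conn.execute(
                "INSERT INTO orders (id, amount) VALUES (?, ?)",
                (order_id, amount),
            )
    except sqlite3.Error as exc:
        # Keep the detailed message in the internal log only
        logger.error("insert_order failed: %s", exc)
        # Re-raise so the caller can intercept the failure
        raise DataAccessError("Could not save the order") from exc

# Hypothetical usage: the second insert violates the primary key
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
insert_order(conn, 1, 9.5)
try:
    insert_order(conn, 1, 3.0)
    user_message = None
except DataAccessError as err:
    user_message = str(err)
```

The caller still learns that the operation failed, which an empty handler would hide, but the text it can show to a user contains no constraint names or other schema details.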

Avoid using TRUNCATE TABLE

Although the TRUNCATE TABLE statement can be useful in some circumstances from a performance perspective, it is problematic because the individual row deletions are not recorded in the transaction log, so the operation is much harder to reverse than a DELETE FROM statement (in Oracle, for example, TRUNCATE commits implicitly and cannot be rolled back). To avoid accidental use of this statement, one technique is to create a dummy foreign key that references the table, so that the TRUNCATE operation fails.
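To illustrate why DELETE is the safer choice, here is a small sketch in Python with the standard `sqlite3` module. SQLite has no TRUNCATE TABLE statement, so this only demonstrates the DELETE side of the comparison, and the `audit_log` table is a hypothetical example. The point is that a DELETE issued inside a transaction can be undone with a rollback.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE audit_log (id INTEGER PRIMARY KEY, msg TEXT)")
conn.executemany(
    "INSERT INTO audit_log (msg) VALUES (?)", [("a",), ("b",), ("c",)]
)
conn.commit()

# DELETE runs inside a transaction that sqlite3 opens implicitly
conn.execute("DELETE FROM audit_log")
rows_during = conn.execute("SELECT COUNT(*) FROM audit_log").fetchone()[0]

# The deletion was a mistake: roll the transaction back
conn.rollback()
rows_after = conn.execute("SELECT COUNT(*) FROM audit_log").fetchone()[0]
```

After the rollback the three rows are back. How TRUNCATE behaves in the same situation depends on the database engine, so check the documentation of the specific product before relying on it.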

Avoid using temporary objects (SQL-Server, Sybase T-SQL)

Triggers, views, functions, and procedures should not use temporary objects, which can negatively impact performance and scalability. If tempdb fills up with excess temporary objects, the server can stop working. In some cases, such as complex queries or queries that act on a large amount of data, temporary objects are useful for splitting data extraction into several steps. If you cannot avoid them, keep track of the temporary objects your application uses and clean them up as soon as they are no longer needed.
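The cleanup advice can be sketched as follows, again in Python with the standard `sqlite3` module rather than T-SQL. The `orders` table, the `tmp_totals` temporary table, and the `top_customers` function are hypothetical. The temporary table stages an intermediate result, and a `finally` block guarantees that it is dropped even if the second step fails.

```python
import sqlite3

def top_customers(conn, min_total):
    # Step 1: stage the aggregated totals in a temporary table
    conn.execute(
        "CREATE TEMP TABLE tmp_totals AS "
        "SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer"
    )
    try:
        # Step 2: query the staged data
        return conn.execute(
            "SELECT customer, total FROM tmp_totals "
            "WHERE total >= ? ORDER BY total DESC",
            (min_total,),
        ).fetchall()
    finally:
        # Always remove the temporary object, on success or failure
        conn.execute("DROP TABLE IF EXISTS temp.tmp_totals")

# Hypothetical sample data
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("acme", 100), ("acme", 50), ("globex", 30)],
)
conn.commit()

first = top_customers(conn, 100)
second = top_customers(conn, 100)  # works again because the temp table was dropped
leftover = conn.execute(
    "SELECT name FROM sqlite_temp_master WHERE type = 'table'"
).fetchall()
```

Because the temporary table is dropped in every code path, repeated calls do not accumulate objects and the function does not fail with a "table already exists" error on the second call.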

OK, but now what? How do you even find these deficiencies?

Of course, the challenge in obtaining all this software intelligence is that it requires a massive ‘egg hunt’ across all the layers, languages, and tens of thousands of objects that make up the application. Chances are that the original developers are no longer around to point to them. The documentation is likely poor at best, and well-intentioned architectural rules (for example, always going through a data access layer) were not always followed.

There is a way, however, to quickly derive intelligence about the mechanics of the application during the database overhaul effort. For example, CAST Imaging automatically ‘understands’ how software applications work. As its name suggests, it then visually maps the tens of thousands of code and data elements that make up the application, and all their relationships. It acts as a living knowledge base of the software's internal structures.

That allows architects, DBAs, and developers to determine in minutes which objects in the overall software system are affected directly or indirectly by the changes in the database. They can see and quickly understand the entire existing database structure, isolate problematic triggers and stored procedures that need addressing, and see the end-to-end transactions hitting the database. One detailed example of how this speeds up the database migration effort can be found in “Modernization to MongoDB Atlas on public cloud simplified with CAST software intelligence.”

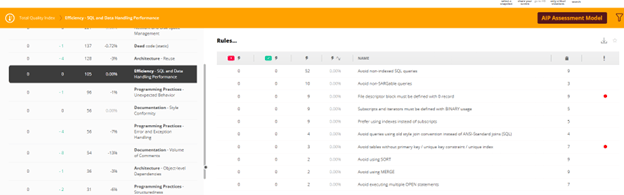

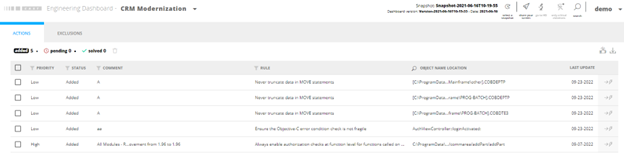

In addition, the Structural Risk Extension of CAST Imaging analyzes the reverse-engineered system logic and automatically finds structural flaws, such as those described earlier in this article. It can display them right on the visual map of the application, and it enumerates them, along with the recommended remediation, via its engineering dashboard.

The engineering dashboard captures violations of an exhaustive set of rules for SQL code in different types of databases, including NoSQL ones. To do that, it requires the databases to be provided for analysis in "offline" file format. CAST does not connect to the database during the analysis; instead, it analyzes the "offline" files containing the database structure and the SQL code (views, procedures, functions, triggers). This non-invasive method therefore has no impact on application performance and lets a dedicated DBA deliver the files. The "offline" files can be generated by the CAST Database Extractor tool or provided directly by the application owner together with the source code.

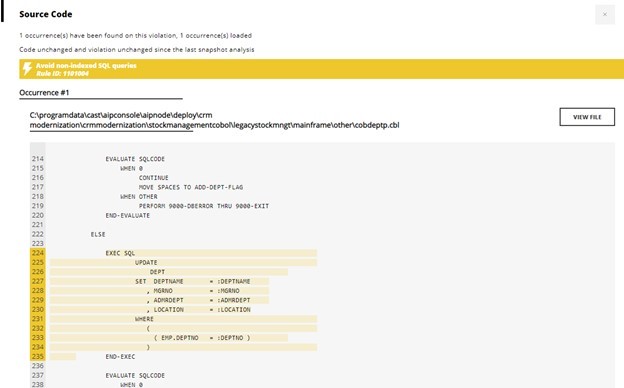

The results of the analyses shown in the CAST Imaging engineering dashboard make it possible to examine critical defects in detail, drilling down all the way to the source code.

Additionally, impact-optimized remediation plans are automatically suggested and can be fed into standard change request systems to address issues in the code that pose a risk to the application.

Currently, several hundred software-intensive companies and system integrators use CAST Imaging to accelerate newcomer onboarding, speed up ongoing maintenance, and de-risk modernization or cloud refactoring efforts. Architects, subject matter experts, or any developer can quickly delve into legacy code, understand existing and to-be architectures, visualize data access and API call graphs, find objects affected by database or framework replacement and candidates for decoupling or microservices, avoiding dead-ends and wrong turns.
