When entering the application security business as a new challenger, success does not come overnight. CAST’s journey into this sector began by carefully planning key milestones we needed to reach. To be recognized as a true contender in the Static Application Security Testing (SAST) space, we decided to expose CAST Application Intelligence Platform (AIP) to the OWASP Benchmark challenge.

Application Security 101: The OWASP Benchmark

If you deal with software security, you are likely already familiar with OWASP (Open Web Application Security Project), and have heard about their famous Top 10 list of vulnerabilities for web and mobile applications. As a developer, this is where you will find useful explanations and references to the security of your application.

A slightly lesser-known project aimed at helping software leaders choose application security tools is the OWASP Benchmark. A quote from their home page:

The OWASP Benchmark for Security Automation is a free and open test suite designed to evaluate the speed, coverage, and accuracy of automated software vulnerability detection tools and services . Without the ability to measure these tools, it is difficult to understand their strengths and weaknesses, and compare them to each other. Each version of the OWASP Benchmark contains thousands of test cases that are fully runnable and exploitable, each of which maps to the appropriate CWE number for that vulnerability.

As CAST’s application security solution continues to challenge incumbent players in the market, it was natural for our CAST Security team to take the next step in our journey. We decided to put ourselves to the test against the OWASP Benchmark.

OWASP Benchmark results for CAST Security

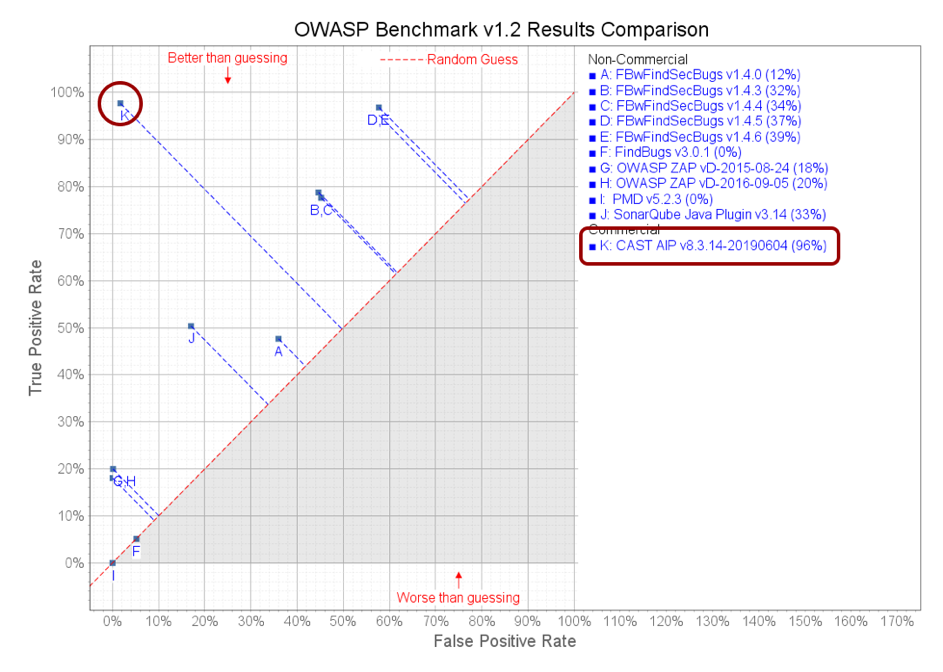

The OWASP Benchmark contains almost 2,800 unit tests covering various vulnerabilities like Injection, Cookie Management, and Encryption. When we run CAST’s SAST solution against the benchmark, we attained a score of 96%!

No, you are not dreaming! Because of CAST’s proprietary dataflow engine, we were able to achieve an outstanding 97.15% True Positive Rate (TPR), and a negligible 1.72% False Positive Rate (FPR). That means, almost every time CAST identifies a security vulnerability, OWASP agrees with our finding!

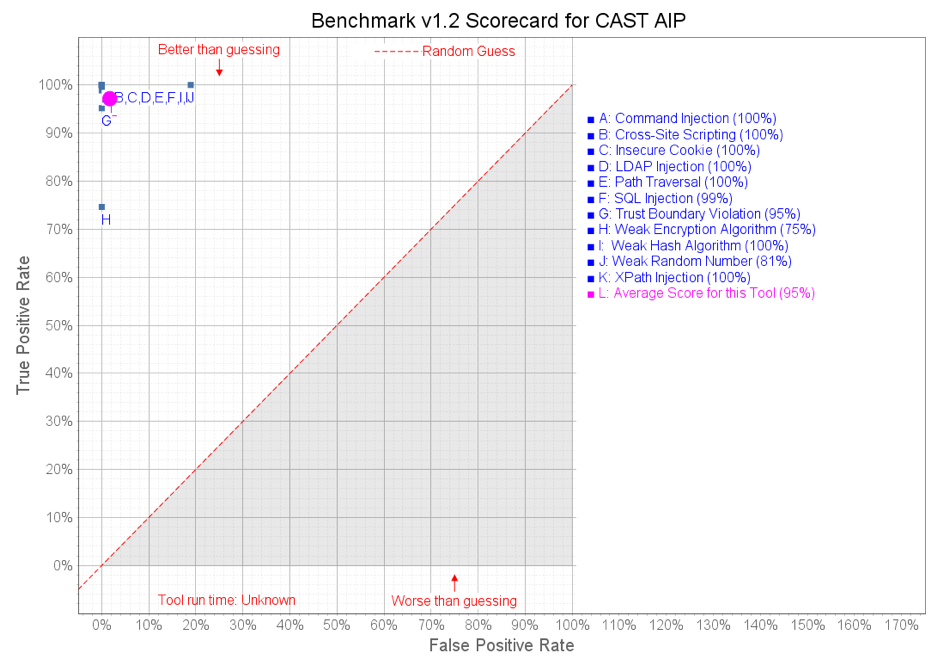

Having a look at the results per vulnerability category, we will see that we have 100% TPR and 0% FPR for Injection problem. Still some improvements regarding Encryption but it will not take a lot of time to complete this category.

How does CAST Security compare to other SAST solutions?

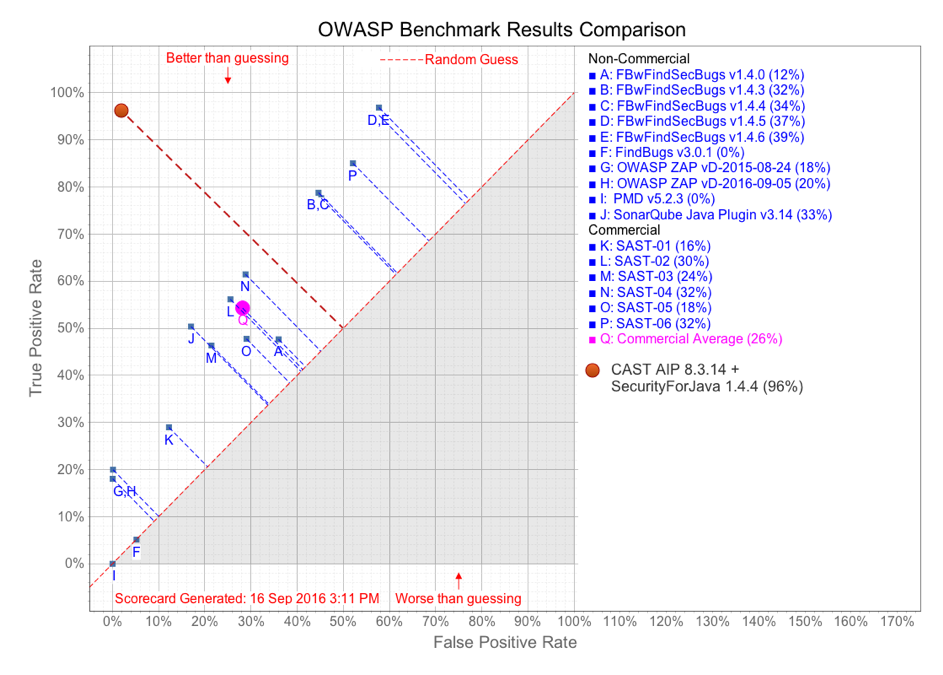

According to the official OWASP Benchmark comparison page, CAST is by far the most accurate among 17 application security tools (16 SAST and 1 DAST). Other commercial tools are anonymized using SAST-X. As you can see, the average result is only 26%--far below CAST’s impressive 96%.

Application Security: Why accuracy matters?

Most of the SAST solutions have traditionally reported as many vulnerabilities as possible, often generating results that need to be manually reviewed. This type of automated results plus manual checking process might make sense for a small application, but in a world where DevSecOps is a must, it is costly and time-consuming.

Let’s say that you use a conventional commercial solution which provides an average of 26% true positives. Let’s also say that you manage a large application with millions of lines of code. Large applications tend to generate hundreds of findings; let’s say 200. That means you have to wade through 200 findings in order find and fix 52 real vulnerabilities. Not to mention, because you’re in DevSecOps mode, your build was broken, and you now have to resubmit the code in the pipeline potentially generating another handful of false positives. Anyone would agree that this is a waste of your valuable time. This is why it is important to choose an application security solution that has high accuracy.

In conclusion, we have learnt a lot while completing the OWASP Benchmark challenge, but an even bigger challenge is waiting for us.

Application Security 301: Juliet Test Suite

Juliet is a set of unit tests like the OWASP Benchmark. It is defined by NIST (National Institute of Standards and Technology) of the United States Department of Commerce. As explained on their website:

[Juliet Suite Test is] A collection of test cases in the Java language. It contains examples organized under 112 different CWEs. Version 1.3 adds test cases for increment and decrement.

Compared to the OWASP Benchmark’s 2,800 tests, the Juliet Test Suite is, by any measure, gigantic. With more than 28,000 tests, the Juliet Test Suite is 10 times more demanding than the OWASP Benchmark. That’s quite a daunting challenge.

Juliet Benchmark results for CAST Security

As our first objective (and for the sake of consistency), we will undertake the test cases that are similar to OWASP Benchmark CWE coverage: a whopping 14,000 tests case. Juliet tests come with a comparison script including open-source and commercial products, but it will come with the same paradigms of TPR and FPR.

Let’s see how the results look like:

The initial results are extremely promising! While test cases related to HTTP Response Splitting and Process Control are not yet supported by CAST Security (they will be coming in a later release), CAST Security achieved extremely positive scores of 94.50% of TPR and 0% of FPR. Once again, we have indications that CAST’s application security capabilities are uniquely accurate.

The Juliet Test suite is in progress, and we are off to a good start!

Conclusion

Well, that concludes the first chapter of CAST Security’s journey on the path to a perfectly accurate application security solution. Stay tuned for our next chapter, when we will reveal the full results of the Juliet Test Case!

Want to find out more about CAST Security, the most accurate application security solution on the planet? Check out our OWASP Benchmark summary page which has many helpful resources on application security.

SHARE